Earlier this month, I had dozens of conversations at Snowflake Summit with engineering and data leaders building and evaluating the next generation of AI-native products. Coming out of the event, one thing is clearer to me than ever: successful AI deployments run on metadata.

Everyone’s chasing AI speed, automation, and integration. But the teams that are set up for success are the ones realizing that the quality of their AI depends on the quality of their metadata.

In nearly every conversation, the same needs came up:

- Data platform and ML engineering teams are looking for metadata control planes: a server-style architecture that lets them manage and govern metadata centrally and programmatically.

- They expect automated AI workflows that eliminate the manual burden of documenting data, models, and pipelines.

- They value API-first and automated lineage, paired with foundational tools like ERDs, to provide both programmatic access and visual clarity across downstream tools and systems.

These are fast-moving data and AI teams who treat metadata like infrastructure. And they’re turning to Secoda.

We’re seeing teams at Vanta, Hippo, Dialpad, and Cashea use Secoda as a core part of their AI architecture. They weren’t just looking for a traditional, static catalog; they needed dynamic metadata that could fuel conversational AI and automation.

That’s exactly what Secoda was built for. Through ongoing investment in AI infrastructure, we deliver automated, contextual metadata that makes data AI-ready from day one. Here are some of those recent features and improvements:

Smarter AI with workspace-deep context

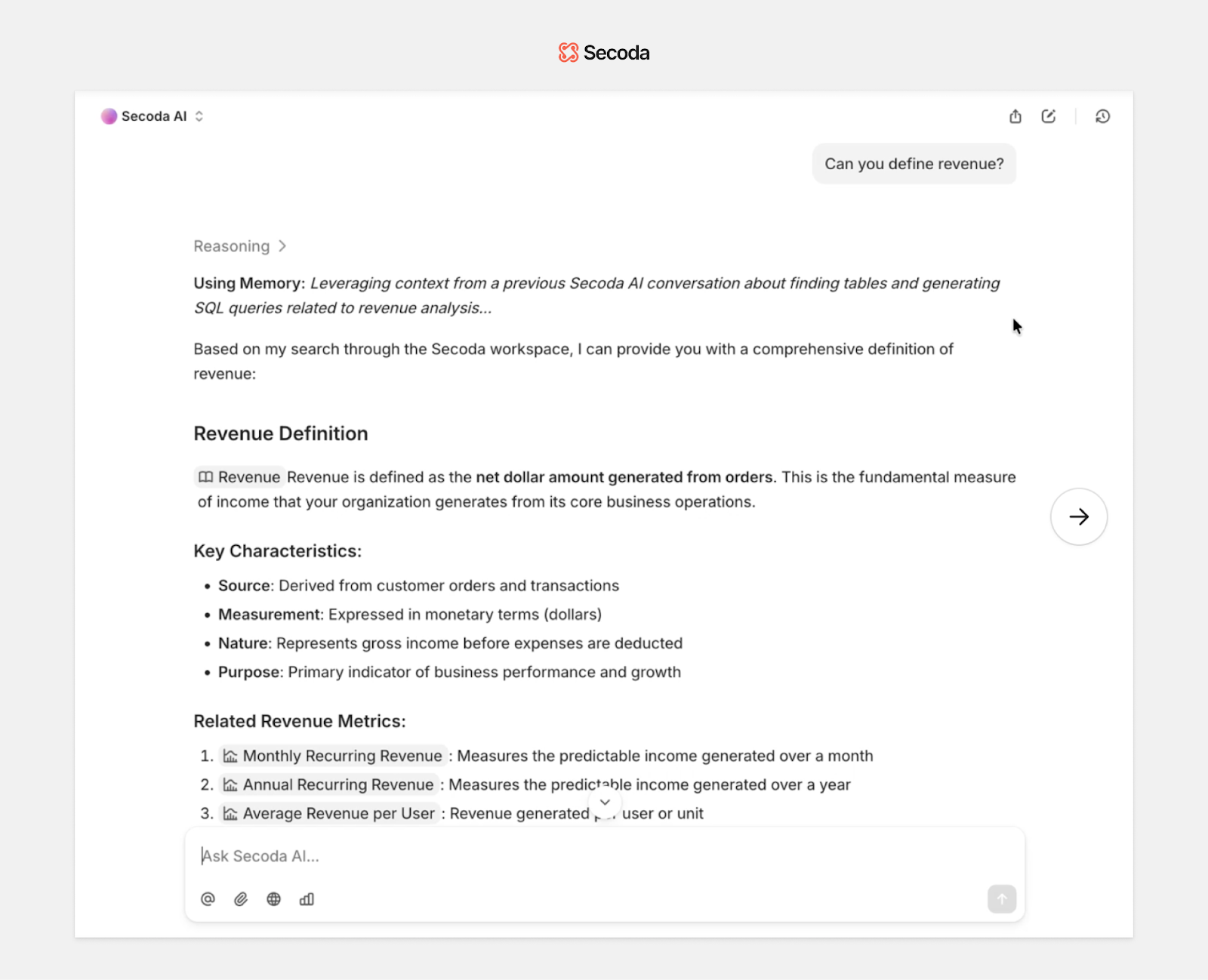

Secoda AI is a context-aware assistant built specifically for data teams managing sophisticated data ecosystems. With advanced memory, Secoda AI learns how your team works by observing how tools are used, which patterns produce results, and where users typically get stuck. It now also refines its understanding of your team’s unique SQL syntax, naming conventions, and query structures. By observing query patterns, transformation logic, common joins, error points, and frequently accessed tables or columns, Secoda AI builds a contextualized knowledge graph of your data environment. This allows it to provide increasingly relevant query suggestions, autocomplete options, documentation enrichment, and troubleshooting guidance that reflect how your team actually works with your data stack.

Each interaction contributes to a growing knowledge base tailored to your workspace. It tracks documentation activity, tool sequences, and user behavior to improve the accuracy and relevance of its responses. Over time, this results in faster answers and smarter suggestions.

Secoda AI also incorporates data quality monitors, governance policies, and saved queries to ground its answers in your actual metadata. This includes lineage, ownership, usage patterns, and documentation. The result is an assistant that understands your data's history, structure, and context, rather than one that relies on surface-level keyword matching.

As usage increases, Secoda AI adapts to your preferences and past interactions. This ongoing learning process allows it to respond more personally and helpfully, becoming a reliable partner for your team’s data questions and documentation workflows.

AI-native workflows

Of course, managing metadata like code also means automating the tedious work that typically slows teams down. Secoda’s AI-native features are designed to take on the most time-consuming of those tasks:

- AI Questions workflow: A streamlined Q&A workflow that lets users ask questions, see how AI reached its answer, flag issues for human review, and continuously improve response quality through real-time feedback.

- AI documentation editor: Generates definitions and docs for your core data assets with the click of a button, no writing required. Leverage custom instructions and agents to follow documentation standards and best practices.

- Support for Model Context Protocol (MCP): A secure, standardized interface that lets AI tools like Cursor and Claude access your governed metadata, such as lineage, glossary terms, and documentation, directly from Secoda. MCP turns Secoda into a source of truth for AI agents, enabling trusted, permission-aware context wherever your team works.
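To make the MCP point a little more concrete, here is a minimal sketch of the kind of message an AI client sends when it calls a tool on an MCP server. MCP is built on JSON-RPC 2.0, and tool invocations use the `tools/call` method. The tool name `get_lineage` and its `table` argument are hypothetical placeholders for illustration, not Secoda's actual tool names.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, used only for illustration.
request = build_tool_call(1, "get_lineage", {"table": "analytics.orders"})

# Serialize the request the way a client would before sending it to the server.
payload = json.dumps(request, indent=2)
print(payload)
```

In practice, an MCP client library would handle transport, authentication, and tool discovery; the point here is only that the agent asks for governed metadata through a small, standardized request shape.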

Open APIs, ERDs, and integrated lineage

Finally, for metadata to truly operate as infrastructure, it must be accessible, extensible, and connected across systems. That’s why we recently released Entity Relationship Diagrams (ERDs), a highly requested feature that gives teams even more coverage of their data ecosystem. ERDs offer a visual layer of understanding that helps teams quickly grasp how their tables are structured and connected, making onboarding easier and schema design more transparent.

Combined with our automated lineage and open APIs, ERDs help paint a more complete picture of your data ecosystem, one that can be consumed by both people and systems. These kinds of foundational tools are critical for teams working at the speed AI demands.
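As a rough illustration of what "consumed by systems" can look like, the sketch below treats lineage as a simple directed graph and walks it to find everything downstream of a given table. The asset names and the `LINEAGE` mapping are made up for this example, and a real workflow would fetch these edges from a lineage API rather than hard-coding them.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to its direct downstream assets.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["analytics.orders", "analytics.revenue"],
    "analytics.orders": ["dashboard.sales"],
    "analytics.revenue": ["dashboard.sales"],
}


def downstream(asset, lineage):
    """Return every asset reachable from `asset`, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Which assets could be affected by a change to raw.orders?
impacted = downstream("raw.orders", LINEAGE)
print(impacted)
```

The same traversal could back an impact check in CI or give an AI agent the list of assets to mention when someone asks what a schema change might break.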

Final thoughts

We’re bringing that same philosophy to metadata.

If you're building AI infrastructure, metadata needs to be part of the foundation. How it's managed, how it flows through your systems, and how it supports decisions all influence whether AI is reliable and ready for production.

At Secoda, we believe metadata should be treated as a first-class citizen in any AI stack. We're building the platform that sets the standard for how teams govern and use metadata at scale.

Etai Mizrahi

Co-founder & CEO, Secoda
